This from NEJM:

“However, it is unclear whether current rating systems are meeting stakeholders’ needs. Such rating systems frequently publish conflicting ratings: Hospitals rated highly on one publicly reported hospital quality system are often rated poorly on another. This provides conflicting information for patients seeking care and for hospitals attempting to use the data to identify real targets for improvement.

However, to our knowledge, there has been no prior systematic review or evaluation of current rating systems that could help inform patients, clinicians, and policymakers of the various systems’ methodologies, strengths, and weaknesses.”

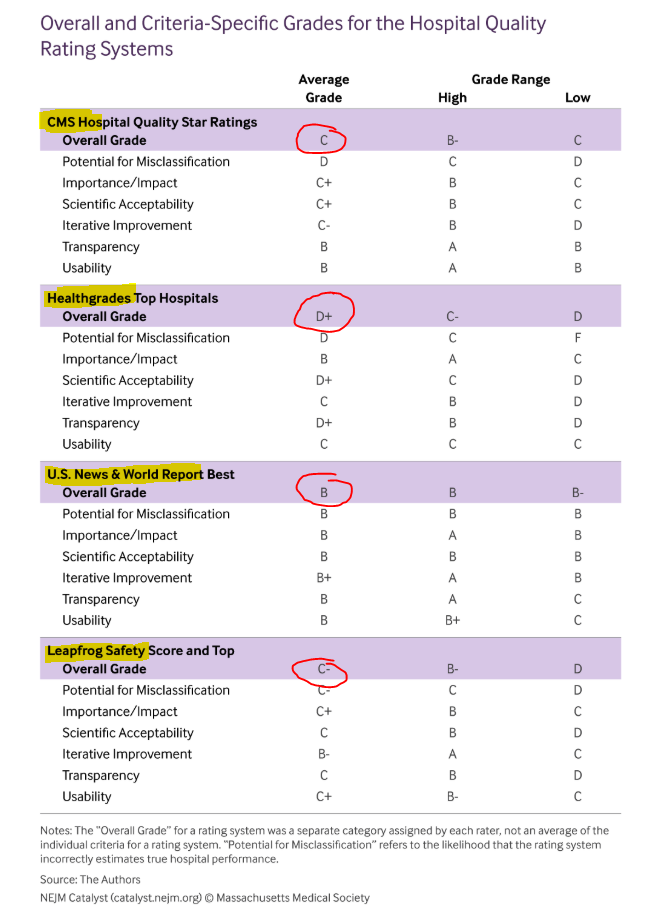

The rating systems in question: CMS Hospital Compare Overall Star Ratings, Healthgrades Top Hospitals, Leapfrog Safety Grade and Top Hospitals, and U.S. News & World Report Best Hospitals.

Note the disparity in the table below and the designs used by each organization. Also note that each submitting hospital takes a different approach to coding and reporting the inputs, so risk adjustment may increase (or decrease) its odds of coming out on top. Reporting adverse events to regulators (or not) does the same, and comparing hospitals whose typical population mixes differ (high versus low SES) can be an apples-to-oranges rather than an apples-to-apples game.

Look at the circles in red. Go with USNWR, and you are sailing smooth. But oh wait, Healthgrades says avoid that same facility at all costs. It is a grown-up-sized problem: whose methods and grades do you trust?

Evaluation science has a long way to go, and this is just one of many examples. There are too many inaccuracies and unaccounted-for reporting domains (“what does the patient say and how are they doing,” for one). One wonders how useful these kinds of assessments are in our present metric milieu. Beyond that, do patients use them and prioritize their findings over what their family or PCP says? Most of the studies we have today are a mixed bag.

I would also add that some everyday rating sites like Yelp can approximate what the big boys mentioned above do. It is wisdom-of-the-crowd type stuff, and who knows what the public will see a decade from now.

Also, note the comments at the bottom of the piece. LOTS of pushback.

Here is a sample of why things are amiss in rating land:

Unfortunately, these administrative data, collected for billing rather than clinical purposes, have notable, well-described shortcomings. The data used are generally limited to those 65 and older who participate in the Medicare Fee-for-Service program. The data often lack adequate granularity to produce valid risk adjustment. Moreover, outcomes reported in administrative data have been shown to have high false-negative and false-positive rates. There are also notable ascertainment or surveillance bias issues that invalidate some of these measures (e.g., the PSI-12 VTE outcome measure).

Hi Brad,

Thanks for sharing this interesting article highlighting the differences in major hospital rating systems. While their methodologies may differ, I think the way forward would be to standardize and validate metrics across these rating organizations. This would require transparency on their part with regard to the data collection and statistical analyses, but would ultimately help generate meaningful reports that could be used by all stakeholders including health systems, patients and families, and clinicians.

Rupesh.